PixVerse R1: The World's First General-Purpose Real-Time World Model

Aishi Technology has officially unveiled PixVerse R1, marking the industry's transition from static AI video generation to dynamic, real-time interactive simulations. Unlike traditional "prompt-and-wait" models, R1 builds a reactive digital world that responds instantly to user intent.

PixVerse R1 Showcase: Experience real-time latency and fluid world interaction.

What defines a "World Model"?

Where traditional AI video generators (like Sora) follow a linear "filming" path, PixVerse R1 focuses on building a stateful environment. It is designed to model the underlying physics, spatial logic, and causal relationships of a scene.

This paradigm shift allows for "Playable Reality", where the video is no longer a fixed file but a persistent process you can interact with. You can change the "plot" or "scene" as it unfolds, and the AI adapts the visual stream in real time. The target output is 1080P; current testing runs at 480P (see Current Constraints & Roadmap).

Technical Breakdown: The Three Pillars

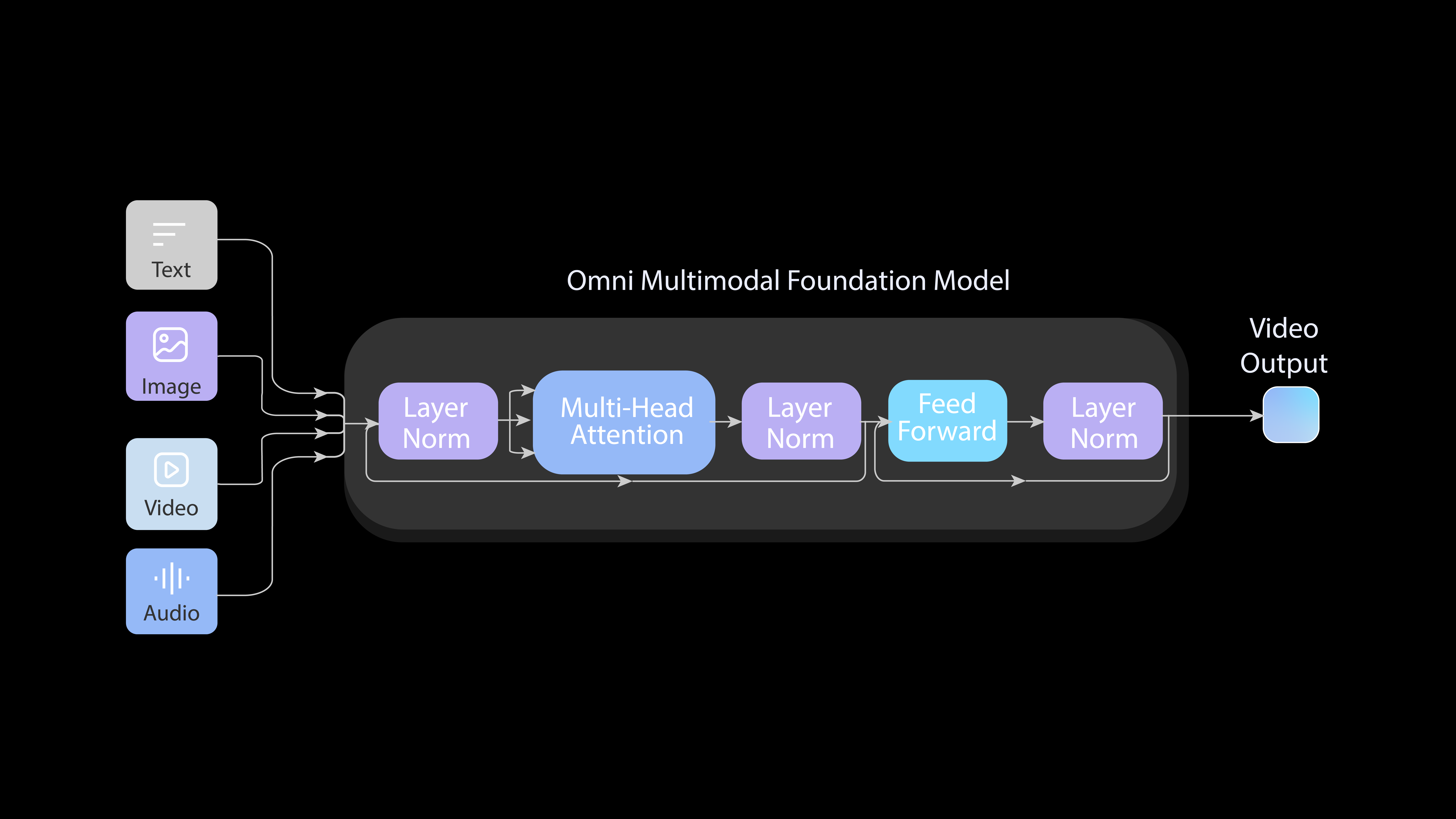

R1's breakthrough is powered by a unified architecture that eliminates traditional media processing silos.

1. Omni: Native Multimodal Foundation

The Omni model unifies text, image, video, and audio into a single token stream. This allows the system to process arbitrary multimodal inputs within a single Transformer framework.

- End-to-End Training: Prevents error propagation found in chained models.

- Native Resolution: Avoids blurriness or artifacts from cropping/upsampling.

- World Physics: Learns intrinsic physical laws from massive real-world video datasets.
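The idea of a single token stream can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the `Token` type, the modality tags, and the integer ids are hypothetical stand-ins, since R1's actual tokenizer and vocabulary are not described in the announcement.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical modality tags; R1's real tokenizer is not public.
MODALITIES = ("text", "image", "video", "audio")


@dataclass(frozen=True)
class Token:
    modality: str  # which kind of input the token came from
    value: int     # id within that modality's (assumed) vocabulary


def tokenize(modality: str, ids: List[int]) -> List[Token]:
    """Tag raw ids with their modality (stand-in for a real encoder)."""
    if modality not in MODALITIES:
        raise ValueError(f"unknown modality: {modality}")
    return [Token(modality, i) for i in ids]


def build_stream(*segments: List[Token]) -> List[Token]:
    """Concatenate per-modality segments into one flat sequence."""
    stream: List[Token] = []
    for segment in segments:
        stream.extend(segment)
    return stream


stream = build_stream(
    tokenize("text", [101, 102]),
    tokenize("image", [7, 8, 9]),
    tokenize("audio", [42]),
)
print([t.modality for t in stream])
# → ['text', 'text', 'image', 'image', 'image', 'audio']
```

Because every input ends up in the same flat sequence, a single model can attend across modalities without a separate pipeline per media type, which is the property the Omni description emphasizes.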

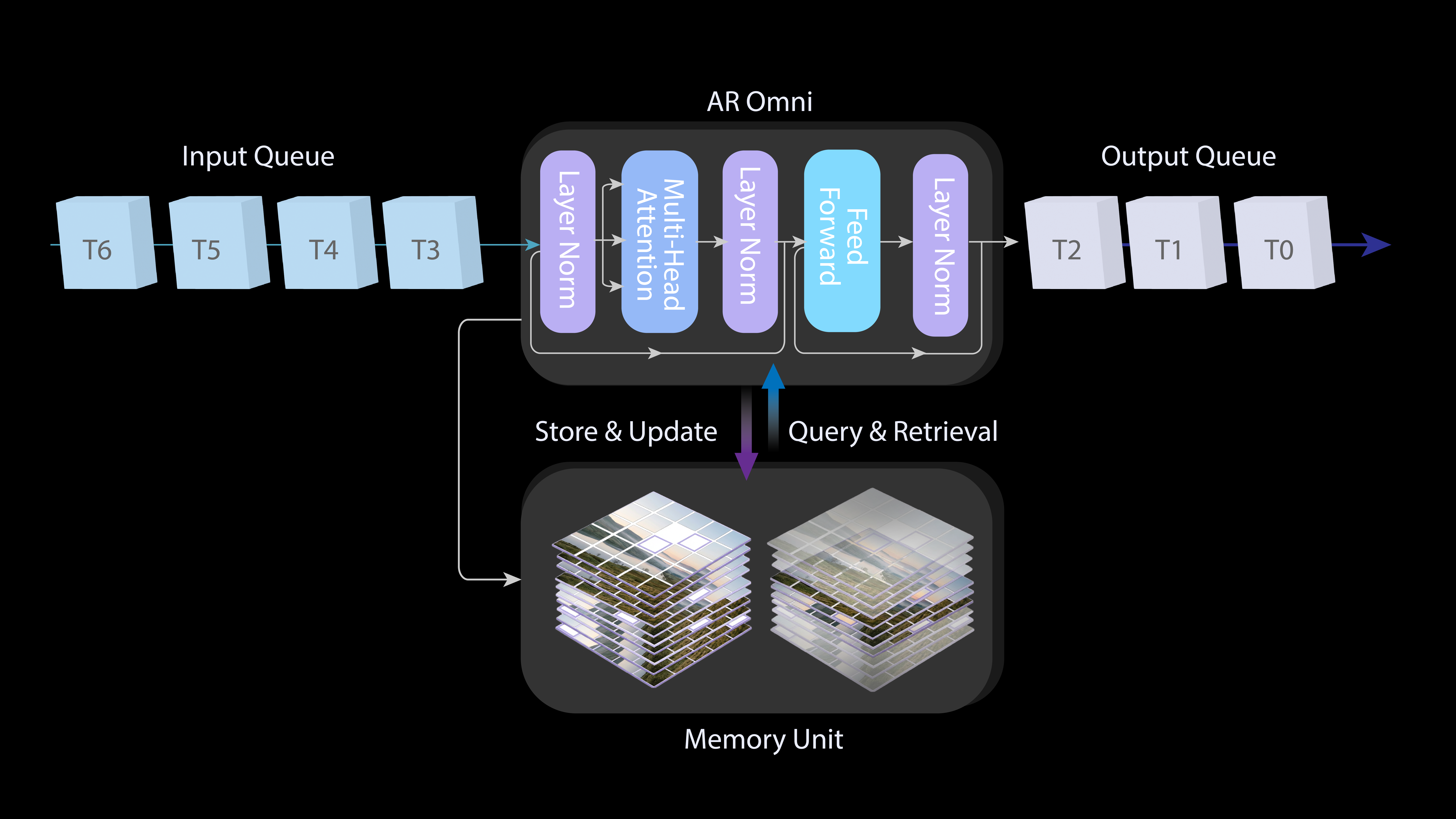

2. Memory: Infinite Streaming & Consistency

Standard models are limited to short, fixed clips. R1 uses an Autoregressive Mechanism with Memory-Augmented Attention to ensure long-term stability.

- Infinite Sequences: Sequentially predicts frames for unbounded visual streaming.

- Temporal Consistency: Characters and environments remain stable even over long durations.

- Contextual Awareness: Conditions the current frame on the entire preceding sequence.
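The points above can be sketched as a toy autoregressive loop. This is not R1's Memory-Augmented Attention: as a simplifying assumption, each "frame" is a single number, the "model" is a fixed weighted blend, and the whole history is compressed into a running mean so that state stays constant in size however long the stream runs. The function name `stream_frames` and its weights are hypothetical.

```python
from collections import deque
from itertools import islice, repeat
from typing import Deque, Iterable, Iterator


def stream_frames(first_frame: float,
                  controls: Iterable[float],
                  window: int = 4) -> Iterator[float]:
    """Yield one new frame per control input, for as long as inputs arrive."""
    recent: Deque[float] = deque([first_frame], maxlen=window)  # short-term context
    summary = first_frame  # running mean of every frame seen so far
    count = 1
    for control in controls:
        local = sum(recent) / len(recent)
        # Toy "model": blend recent frames, long-term summary, and user input.
        frame = 0.5 * local + 0.3 * summary + 0.2 * control
        yield frame
        recent.append(frame)
        count += 1
        summary += (frame - summary) / count  # incremental mean update


# The control source can be unbounded; here we take only the first 5 frames.
frames = list(islice(stream_frames(0.0, repeat(1.0)), 5))
print([round(f, 3) for f in frames])
```

The generator never stores the full sequence, yet every new frame still depends on all earlier ones through `summary`. A real system would replace the running mean with learned memory, but the control flow (predict, emit, update memory, repeat) has the same shape.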

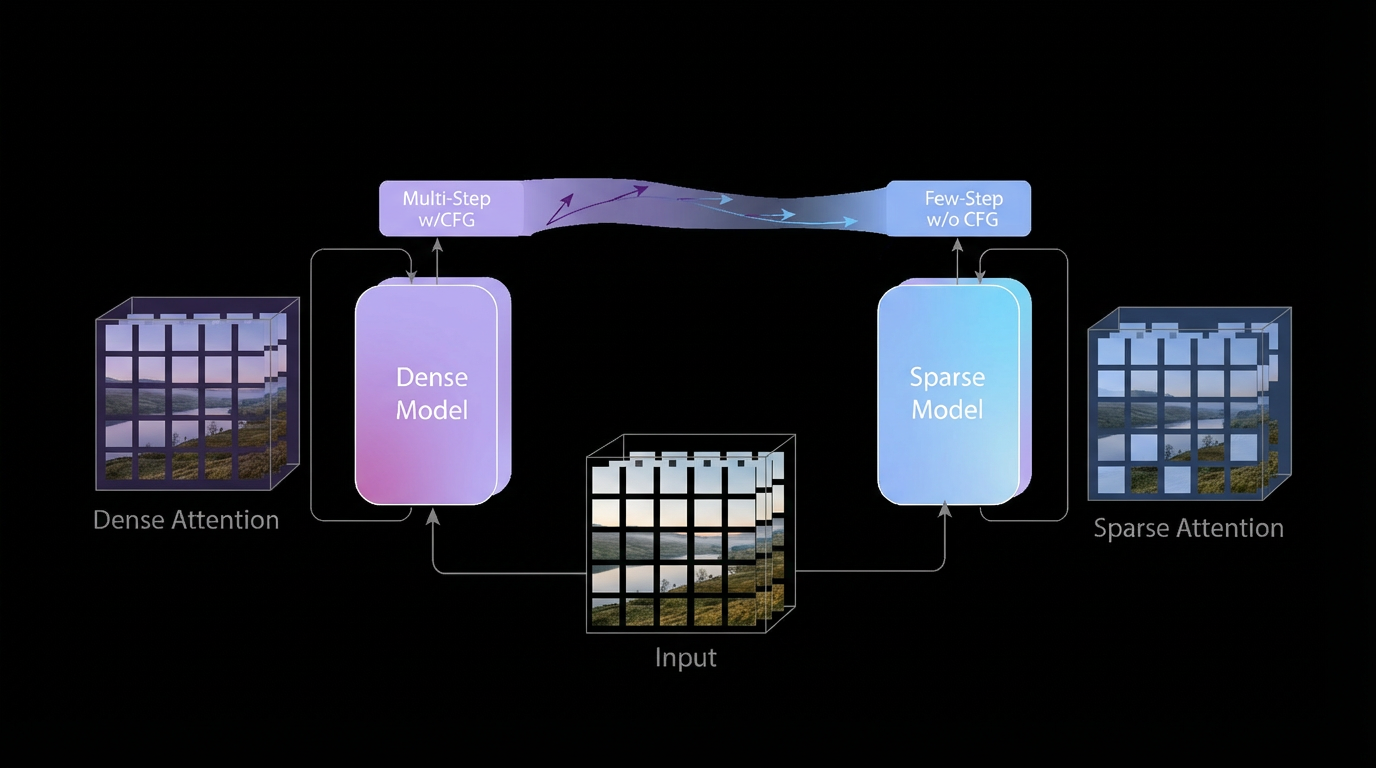

3. IRE: Instantaneous Response Engine

Achieving 1080P in real time requires extreme optimization. The IRE re-architects the sampling pipeline to deliver ultra-low latency.

- Trajectory Folding: Compresses sampling from 50+ steps down to just 1–4 steps.

- Guidance Rectification: Merges conditional gradients into the student model directly.

- Sparse Attention: Dynamically allocates computing resources, which the company says improves efficiency by a factor of hundreds.
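To see why step count matters, consider a generic Euler sampler, used here as a stand-in because the announcement does not publish the Trajectory Folding algorithm. The sketch assumes an idealized case in which the path from noise to the final sample is a straight line, so the velocity is constant and a coarse step size loses nothing. The helper names and the scalar "sample" are hypothetical.

```python
from typing import Callable, Dict, Tuple


def make_velocity(target: float) -> Tuple[Callable[[float, float], float], Dict[str, int]]:
    """Return a toy velocity field plus a counter of how often it is called."""
    calls = {"n": 0}

    def velocity(x: float, t: float) -> float:
        calls["n"] += 1  # each call stands in for one expensive network pass
        return (target - x) / (1.0 - t)  # points straight at the target

    return velocity, calls


def euler_sample(x0: float,
                 velocity: Callable[[float, float], float],
                 steps: int) -> float:
    """Integrate from t=0 to t=1 with a fixed number of Euler steps."""
    x, dt = x0, 1.0 / steps
    for i in range(steps):
        x += dt * velocity(x, i * dt)
    return x


for steps in (50, 4, 1):
    velocity, calls = make_velocity(target=3.0)
    result = euler_sample(0.0, velocity, steps)
    print(f"steps={steps:2d}  model calls={calls['n']:2d}  result={result:.6f}")
```

On this straight path all three settings land on the same value, while the number of model calls drops from 50 to 4 (12.5 times fewer) or to 1. Real sampling trajectories are curved, which is why few-step samplers typically rely on distillation to keep quality at low step counts.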

Unlocking New Creative Frontiers

AI-Native Gaming

NPCs and environments that evolve dynamically in response to player choices, creating unique, non-scripted worlds.

Interactive Cinema

Films where the plot and visuals adapt to viewer input (voice or gesture), blurring the line between audience and director.

Immersive Simulation

Real-time VR/XR training environments, scientific scenario exploration, and industrial simulations.

Comparison

| Capability | Traditional AI Video | PixVerse R1 |
|---|---|---|
| Latency | Seconds to Minutes | Instantaneous |
| Stream Length | Fixed Clips (e.g., 5s-10s) | Infinite & Continuous |
| Interactive Mode | One-way Prompting | Real-Time Dialogue-based |

Current Constraints & Roadmap

- Resolution: Currently testing at 480P, with 720P and full 1080P rollout expected soon.

- Transitions: Smoothing out hard cuts and maintaining character identity consistency over extreme durations.

- Accessibility: Currently invitation-only due to high computational server costs.


Frequently Asked Questions

What is Aishi Technology's background?

Founded by CEO Wang Changhu, Aishi Technology has reached over 100 million users and recently secured $60 million in Series B funding, led by Alibaba, to scale world model production.

How does it compare to Runway GWM-1?

While Runway GWM-1 focuses on environment and robotics simulation, PixVerse R1 is optimized for high-fidelity 1080P media and entertainment applications, providing a specialized "Instantaneous Response Engine" for creators.

Can I create my own themes?

Yes, the platform supports custom themes where you can define the aspect ratio, art style, and core narrative parameters for your real-time world.